Last week, I visited Paris as a guest of UNESCO’s International Conference on Language Technologies (#LT4All). It was a large gathering of language practitioners — from linguists to teachers to tech gurus and other executives — under one roof to share ideas, discuss obstacles, and showcase current activities in the sphere of language technologies. The theme “Enabling Linguistic Diversity and Multilingualism Worldwide” was part of the framework of the 2019 International Year of Indigenous Languages.

It was my first time in the city and in the country (since layovers don’t count).

The conference was co-sponsored by Google (as a Founding Private Sponsor), The Government of the Khanty-Mansiysk Autonomous Okrug-Ugra (as a Founding Public Donor), UNESCO, Japan, France, and NSF as public donors, and others like Facebook, Systran, Microsoft, Amazon Alexa, Mozilla, IBM Research AI, etc, as regular sponsors. Team leaders from many of these companies were around to speak and share ideas from their ongoing work. It was a delight to be able to listen to many of them, and make connections. I met, for the first time, Daan van Esch, who leads Google’s GBoard global efforts, and with whom I’ve worked in some capacity on these efforts while I worked at Google on some Nigerian language projects. His presentation was about GBoard and how it has empowered more people to write properly in their languages on mobile devices.

I also made acquaintance with Craig Cornelius who has done some work for the consortium, but now works at Google as a Senior Software Engineer. This was during a panel on Unicode where I mentioned the fact that Yorùbá writing on the internet has suffered greatly because of Unicode’s inscrutable decision not to allow pre-composed characters. Because Yorùbá diacritics are usually both on top of the vowel and beneath it, one usually has to find so many different Unicode characters to match before one properly tone-marked character can be typed. Beyond the fact that this would be a nightmare for someone having to type a whole passage (or a novel — imagine!), it is usually often still impossible to find the right combinations. And when one manages to find the combinations, the difference between how one computer system or word processor codes its software often makes it impossible for the text to remain readable by a second or third party. I encounter this problem every day while working on the catalogue at the British Library where many of the Yorùbá books listed there appear in a variety of fonts in the BL system, some of which make the titles unreadable or with a different intended meaning.

GBoard has mitigated some of these problems. In Yorùbá on the GBoard app, for instance, we now have pre-composed characters like ọ̀ and ọ́ and ọ and ẹ and ẹ́ and ẹ̀, etc, which can be inserted instantly without any secondary combinations. What we need, as I said during the subsequent informal conversation about the subject, is something like that for Unicode so that every new computer user does not have to spend valuable time doing diacritic permutations from the Insert>Symbol field. Or for browsers (Chrome, Explorer, Mozilla, Safari, etc), so we can stop waiting for Unicode to change its ways.

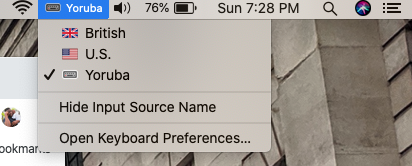

In 2016, through the Yorùbá Names Project that I founded, we created a free tonemarking software for Yorùbá and Igbo, for Mac and Windows, which has been very helpful in writing on the computer — and with which I have typed all the diacritics in this post. It still combines character elements, however, but it is software-keyboard-based, and a lot more intuitive.

Its limitations show up when a document typed with the software has to be read with another program (like Adobe or Microsoft Word, then the cycle begins again). It would be helpful if the functionality of this nature already came with the computer so there is uniformity. Imagine if every computer sold in Nigeria already suggests to the user to flip the language as I do above so that the keyboard automatically allows for diacritic markings that can transmit across different programs. That would be great, won’t it? The conversation with these gentlemen convinced me that it is doable, but would take time, and different companies coming together to agree that African languages matter on these platforms. It has not always been the case.

One of the other language products that was showcased there was Woolaroo, created in conjunction with Google Arts & Culture, which is a crowdsourcing visual dictionary for a small Australian language. When publicly launched, users will be able to take photos, and then use that photo to submit words for items in the image, which is then sent to a database and shared with other users. For languages with few speakers, but whose speakers use the tools of technology, it is one way of eliciting lexical items without having to do the physical fieldwork that has characterized most language documentation efforts in the past. There is significance for this type of approach for languages in Nigeria, for instance, where old people who know the name of items are not literate to write, but can perhaps be made to use the visual aid of phones to contribute as much as possible while they are still alive.

There were other Nigerians, and Africans, at the conference. I met Dr. Túndé Adégbọlá of the African Language Technology Initiative (ALT-i), Àbákẹ́ Adénlé of AJA.LA Studios, Professor Chinedu Uchechukwu of the Nnamdi Azikiwe University in Awka, Nigeria, Professor Sunday Òjó, and Adama Samassekou (the founder of the African Academy of Languages), among others. It was a diverse group of people working in different aspects of language revitalization, technology, and documentation. Mark Liberman, whom I was also meeting for the first time, shared my concern about Unicode, having done some work himself in Nigerian languages, and been frustrated by the problem of finding the right diacritics in a simple and accessible way.

Paris is beautiful at night — perhaps much better looking at night actually. The monuments are lit up, and the beauty of the city shines out from within the glow. The language of the city, naturally, is French, but the tourist who speaks not more than a smattering of the language doesn’t run into much of a problem.

It was cold most of the time, which made walking around a bit of an ordeal. It reminds me a lot of the other global city I once attempted to walk around in 2009. A day before I arrived in Paris, there had been a massive strike that paralysed the entire country and rendered public transportation useless. This could explain why Uber appeared a lot more expensive that I’d experienced elsewhere. Would have been nice to see how different the Metro was from the Tube in England. But the strike also meant that the city was less crowded — at least the usually touristic areas — and the public trash cans seemed always in need of emptying.

I had got a travel grant of £1,011 to attend the conference — which covered my visa (~£295), hotel (~£270), food (~63), train (~£500), and Ubers (~£81.77) and was helpful and convenient, especially since I had to pay for the highest end of many of these things due to the rushed arrangement. My visa was issued on the 4th, so I was only able to attend the sessions on the 5th and 6th, leaving the city in the evening of the 7th. Still, it was enough to take in the fine city, sample the food, make connections, and make future plans to return for more adventure.

The train ride on the Eurostar from St. Pancras to Gare du Nord, which took just under three hours, is a story of its own.

2 Comments to Two Nights in Paris so far. (RSS Feeds for comments in this post)